From hunting for evidence of subatomic particles to speeding up the diagnosis of disease, generative artificial intelligence (AI) is transforming science, and the research behind it led to the 2024 Nobel Prize for Physics. Claire Malone explains why this rapidly developing technology could also – despite some misgivings – be good news for communicating science.

In August 2024 the influential Australian popular-science magazine Cosmos found itself not just reporting the news – it had become the news. Owned by CSIRO Publishing – part of Australia’s national science agency – Cosmos had posted a series of “explainer” articles on its website that had been written by generative artificial intelligence (AI) as part of an experiment funded by Australia’s Walkley Foundation. Covering topics such as black holes and carbon sinks, the text had been fact-checked against the magazine’s archive of more than 15,000 past articles to guard against misinformation, but at least one of the new articles contained inaccuracies.

Critics, such as the science writer Jackson Ryan, were quick to condemn the magazine’s experiment as undermining and devaluing high-quality science journalism. As Ryan wrote on his Substack blog, AI not only makes things up and trains itself on copyrighted material, but “for the most part, provides corpse-cold, boring-ass prose”. Contributors and former staff also complained to Australia’s ABC News that they’d been unaware of the experiment, which took place just a few months after the magazine had made five of its eight staff redundant.

The Cosmos incident is a reminder that we’re in the early days of using generative AI in science journalism. It’s all too easy for AI to get things wrong and contribute to the deluge of online misinformation, potentially damaging a modern society in which science and technology shape so many aspects of our lives. Accurate, high-quality science communication is vital, especially if we are to pique the public’s interest in physics and encourage more people into the subject.

Kanta Dihal, a lecturer at Imperial College London who researches the public’s understanding of AI, warns that the impacts of recent advances in generative AI on science communication are “in many ways more concerning than exciting”. Sure, AI can level the playing field by, for example, enabling students to learn video-editing skills without expensive tools and helping people with disabilities to access course material in formats that suit them. “[But there is also] the immediate large-scale misuse and misinformation,” Dihal says.

We do need to take these concerns seriously, but AI could benefit science communication in ways you might not realize. Simply put, AI is here to stay – in fact, the science behind it led to the physicist John Hopfield and computer scientist Geoffrey Hinton winning the 2024 Nobel Prize for Physics. So how can we marshal AI to best effect not just to do science but to tell the world about science?

Dangerous game

Generative AI is a step up from “machine learning”, in which a computer predicts how a system will behave based on data it has analysed. Machine learning is used in high-energy physics, for example, to model particle interactions and detector performance. It does this by learning to recognize patterns in existing data, making predictions and then validating those predictions against data that was held back from training. Machine learning saves researchers from having to manually sift through terabytes of data from experiments such as those at CERN’s Large Hadron Collider.
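
To make the “learn, predict, validate” loop concrete, here is a deliberately tiny sketch in Python. It is not how any CERN experiment processes its data: the straight-line model, the made-up noisy data and the 75/25 split are all illustrative assumptions. The model learns a pattern (a slope and an intercept) from one portion of the data and is then scored on a held-out portion it never saw during fitting.

```python
import random


def fit_line(xs, ys):
    """Least-squares fit of y = a*x + b: the 'pattern' learned from data."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b


def mean_squared_error(a, b, xs, ys):
    """Average squared gap between predictions and the true values."""
    return sum((a * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hypothetical toy data: a linear relationship plus random noise.
random.seed(0)
xs = [i / 10 for i in range(200)]
ys = [2.0 * x + 1.0 + random.gauss(0, 0.5) for x in xs]

# Shuffle, then hold back 25% of the data that the model never sees while fitting.
pairs = list(zip(xs, ys))
random.shuffle(pairs)
split = int(0.75 * len(pairs))
train, test = pairs[:split], pairs[split:]

a, b = fit_line([x for x, _ in train], [y for _, y in train])
held_out_error = mean_squared_error(a, b, [x for x, _ in test], [y for _, y in test])
print(f"learned slope={a:.2f}, intercept={b:.2f}")
print(f"held-out error={held_out_error:.3f}")
```

A low error on the held-out points suggests the model has picked up the underlying pattern rather than simply memorizing the examples it was trained on.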

Generative AI, on the other hand, doesn’t just recognize and predict patterns – it can create new ones too. When it comes to the written word, a generative AI could, for example, invent a story from a few lines of input. It is exactly this language-generating capability that caused such a furore at Cosmos and led some journalists to worry that AI might one day make their jobs obsolete. But how does a generative AI produce replies that feel like a real conversation?

Perhaps the best-known generative AI is ChatGPT (where GPT stands for generative pre-trained transformer), which is an example of a large language model (LLM). Language modelling dates back to the 1950s, when the US mathematician Claude Shannon applied information theory – the branch of maths that deals with quantifying, storing and transmitting information – to human language. Shannon measured how well language models could predict the next word in a sentence by assigning probabilities to each word based on patterns in the data the model is trained on.
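
Shannon’s idea of assigning a probability to each possible next word can be illustrated with a so-called bigram model, which looks only at the single previous word. The Python sketch below is a minimal illustration under that simplifying assumption; the miniature corpus is made up, and modern LLMs condition on far longer stretches of text using neural networks rather than simple counts.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Estimate P(next word | current word) by counting adjacent word pairs."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        counts[current][nxt] += 1
    # Normalize each word's follower counts into probabilities that sum to 1.
    model = {}
    for current, followers in counts.items():
        total = sum(followers.values())
        model[current] = {word: n / total for word, n in followers.items()}
    return model


def most_likely_next(model, word):
    """Predict the next word as the highest-probability follower."""
    followers = model[word]
    return max(followers, key=followers.get)


# Hypothetical miniature training corpus.
corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigram_model(corpus)
print(model["the"])                    # {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
print(most_likely_next(model, "the"))  # cat
```

Because “the” is followed by “cat” twice and by “mat” and “fish” once each, the model gives “cat” a probability of one half and predicts it as the most likely next word.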

Such methods of statistical language modelling are now fundamental to a range of natural language processing tasks, from building spell-checking software to translating between languages and even recognizing speech. Recent advances in these models have significantly extended the capabilities of generative AI tools, with the “chatbot” functionality of ChatGPT making it especially easy to use.

ChatGPT racked up a million users within five days of its launch in November 2022, and since then other companies have unveiled similar tools, notably Gemini from Google and Perplexity. ChatGPT, which had more than 600 million users per month as of September 2024, is trained on a range of sources, including books, Wikipedia articles and chat logs (although the precise list has not been made public). The AI spots patterns in the training texts and builds sentences by predicting the most likely word to come next.

ChatGPT operates a bit like a slot machine, with probabilities assigned to each possible next word in the sentence. In fact, the term AI is a little misleading, being more “statistically informed guessing” than real intelligence, which explains why ChatGPT has a tendency to make basic errors or “hallucinate”. Cade Metz, a technology reporter from the New York Times, reckons that chatbots invent information as much as 27% of the time.
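
The slot-machine analogy can be sketched in a few lines of Python. The words and probabilities here are invented for illustration; real models choose among tens of thousands of word fragments (“tokens”). Always picking the top word is deterministic, whereas sampling in proportion to probability occasionally produces an unlikely, and here wrong, continuation. This is one reason chatbot output varies from run to run, not a complete explanation of hallucination.

```python
import random

# Hypothetical probabilities for the word that follows "The sky is".
next_word_probs = {"blue": 0.70, "clear": 0.20, "green": 0.10}


def greedy_next(probs):
    """Always choose the single most probable word (deterministic)."""
    return max(probs, key=probs.get)


def sample_next(probs, rng):
    """'Pull the lever': choose a word at random, weighted by its probability."""
    words = list(probs)
    weights = [probs[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]


rng = random.Random(42)  # fixed seed so the run is reproducible
print(greedy_next(next_word_probs))  # blue
draws = [sample_next(next_word_probs, rng) for _ in range(1000)]
# Expect roughly 70% "blue", 20% "clear" and 10% "green", the last a fluent but wrong answer.
print({w: draws.count(w) for w in next_word_probs})
```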

One notable hallucination occurred in February 2023 when Bard – Google’s forerunner to Gemini – declared in its first public demonstration that the James Webb Space Telescope (JWST) had taken “the very first picture of a planet outside our solar system”. As Grant Tremblay from the US Center for Astrophysics pointed out, this feat had been accomplished in 2004, some 17 years before the JWST was launched, by the European Southern Observatory’s Very Large Telescope in Chile.

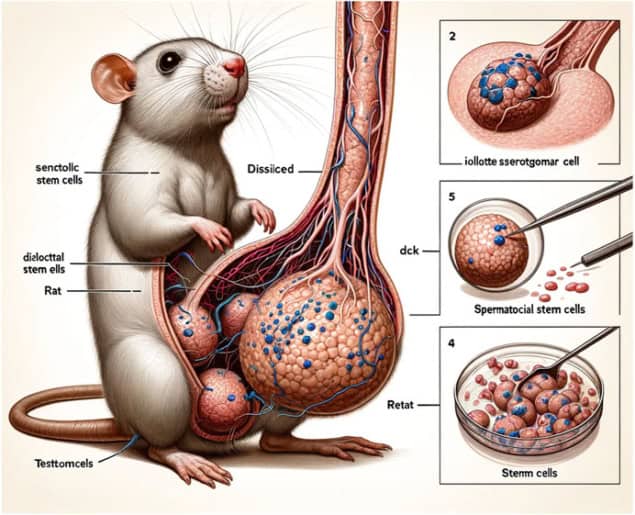

Another embarrassing incident involved a comically inaccurate anatomical picture of a rat, created by the AI image generator Midjourney, which appeared in a journal paper that was subsequently retracted. Some hallucinations are more serious. Amateur mushroom pickers, for example, have been warned to steer clear of online foraging guides, likely written by AI, that contain information running counter to safe foraging practices. Many edible wild mushrooms look deceptively similar to their toxic counterparts, making careful identification critical.

By using AI to write online content, we’re in danger of triggering a vicious circle of increasingly misleading statements, polluting the Internet with unverified output. What’s more, AI can perpetuate existing biases in society. Google, for example, was forced to publish an embarrassing apology, saying it would “pause” Gemini’s ability to generate images of people after the service was used to create images of racially diverse Nazi soldiers.

More seriously, women and some minority groups are under-represented in healthcare data, biasing the training set and potentially skewing the recommendations of predictive AI algorithms. One study led by Laleh Seyyed-Kalantari from the University of Toronto (Nature Medicine 27 2176) found that computer-aided diagnosis of chest X-rays is less accurate for Black patients than for white patients.
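
One way such a disparity comes to light is when a model’s performance is broken down by group rather than reported as a single figure. The Python sketch below uses entirely hypothetical toy records in which group B is both smaller and less well served; these are not data from the study cited above, and the simple accuracy measure is an illustrative choice.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return overall accuracy and per-group accuracy for (group, predicted, actual) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        correct[group] += int(predicted == actual)
    overall = sum(correct.values()) / sum(total.values())
    per_group = {g: correct[g] / total[g] for g in total}
    return overall, per_group


# Hypothetical toy records: (group, model's diagnosis, true diagnosis).
# Group A is well represented; group B is small and more often missed.
records = (
    [("A", "disease", "disease")] * 45
    + [("A", "healthy", "healthy")] * 45
    + [("A", "healthy", "disease")] * 10
    + [("B", "disease", "disease")] * 6
    + [("B", "healthy", "healthy")] * 6
    + [("B", "healthy", "disease")] * 8
)
overall, per_group = accuracy_by_group(records)
print(f"overall accuracy: {overall:.2f}")  # 0.85
print(per_group)                           # {'A': 0.9, 'B': 0.6}
```

The headline figure of 85% looks respectable, but it hides the fact that the model is right only 60% of the time for the under-represented group.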

Generative AI could even increase inequalities if it becomes too commercial. “Right now there’s a lot of free generative AI available, but I can also see that getting more unequal in the very near future,” Dihal warns. People who can afford to pay for ChatGPT subscriptions, for example, have access to versions of the AI based on more up-to-date training data. They therefore get better responses than users restricted to the “free” version.

Clear communication

But generative AI tools can do much more than churn out uninspired articles and create problems. One beauty of ChatGPT is that users interact with it conversationally, just like you’d talk to a human communicator at a science museum or science festival. You could start by typing something simple (such as “What is quantum entanglement?”) before delving into the details (e.g. “What kind of physical systems are used to create it?”). The answers can be tailored to your needs in a way that a standard textbook cannot match.

Generative AI could also boost access to physics by providing an interactive way to engage with groups – such as girls, people of colour or students from low-income backgrounds – who might face barriers to accessing educational resources in more traditional formats. That’s the idea behind online tuition platforms such as Khan Academy, which has integrated a customized version of ChatGPT into its tuition services.

Instead of presenting fully formed answers to questions, Khan Academy’s AI tutor is programmed to prompt users to work out the solution themselves. If a student types, say, “I want to understand gravity” into Khan’s generative AI-powered tutoring program, the AI will first ask what the student already knows about the subject. The “conversation” between the student and the chatbot will then evolve in the light of the student’s response.

AI can also remove barriers that some people face in communicating science, allowing a wider range of voices to be heard and thereby boosting the public’s trust in science. I have cerebral palsy, and AI has transformed how I work by enabling me to turn my speech into text in an instant (see box below).

It’s also helped Duncan Yellowlees, a dyslexic research developer who trains researchers to communicate. “I find writing long text really annoying, so I speak it into OtterAI, which converts the speech into text,” he says. The text is sent to ChatGPT, which converts it into a blog. “So it’s my thoughts, but I haven’t had to write them down.”

Then there’s Matthew Tosh, a physicist-turned-science presenter specializing in pyrotechnics. He has a progressive disease that has made it increasingly difficult for him to write concisely. ChatGPT, however, lets him create draft social-media posts, which he then rewrites in his own words. As a result, he can maintain that all-important social-media presence while managing his disability at the same time.

Generative AI bots do occasionally make mistakes, but misinformation is nothing new. “That’s part of human behaviour, unfortunately,” Tosh admits. In fact, he thinks errors can – perversely – be a positive. Students who wrongly think a kilo of cannonballs will fall faster than a kilo of feathers create the perfect chance for teachers to discuss Newtonian mechanics. “In some respects,” says Tosh, “a little bit of misinformation can start the conversation.”
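
The physics behind Tosh’s cannonballs-and-feathers example fits in two lines of Newtonian mechanics, sketched here under the idealized assumptions of uniform gravitational acceleration g and an object of mass m dropped from rest at height h. Without air resistance the mass cancels, so both loads take the same time t to land:

$$
m a = m g \;\Rightarrow\; a = g, \qquad h = \tfrac{1}{2} g t^{2} \;\Rightarrow\; t = \sqrt{\frac{2h}{g}}
$$

Adding a drag force F_drag, which depends on an object’s shape and speed, changes the picture:

$$
m a = m g - F_{\text{drag}} \;\Rightarrow\; a = g - \frac{F_{\text{drag}}}{m}
$$

It is this extra term, not the mass itself, that makes loose feathers drift down more slowly in air.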

AI as a voice-to-text tool

As a science journalist – and previously as a researcher hunting for new particles in data from the ATLAS experiment at CERN – I’ve longed to use speech-to-text programs to complete assignments. That’s because I have a disability – cerebral palsy – that makes typing impractical. For a long time this meant I had to dictate my work to a team of academic assistants for many hours a week. But in 2023 I started using Voiceitt, an AI-powered app optimized for speech recognition for people with non-standard speech like mine.

You train the app by first reading out a couple of hundred short training phrases. The app’s AI then draws on speaker models, built from thousands of hours of other non-standard speech in its database, to optimize this training. As Voiceitt is used, it continues refining the AI model, improving speech recognition over time. The app also has a generative AI model to correct any grammatical errors created during transcription. Each week, I find myself correcting the app’s transcriptions less and less, which is a bonus when facing journalistic deadlines, such as the one for this article.

The perfect AI assistant?

One of the first news organizations to experiment with AI tools was Associated Press (AP), which in 2014 began automating routine financial stories about corporate earnings. AP now also uses AI to create transcripts of videos, write summaries of sports events, and spot trends in large stock-market data sets. Other news outlets use AI tools to speed up “back-office” tasks such as transcribing interviews, analysing information or converting data files. Tools such as Midjourney can even help journalists to brief professional illustrators to create images.

However, there is a fine line between using AI to speed up your workflow and letting it make content without human input. Many news outlets and writers’ associations have issued statements guaranteeing not to use generative AI as a replacement for human writers and editors. Physics World, for example, has pledged not to publish fresh content generated purely by AI, though the magazine does use AI to assist with transcribing and summarizing interviews.

So how can generative AI be incorporated into the effective and trustworthy communication of science? First, it’s vital to ask the right question – in fact, composing a prompt can take several attempts to get the desired output. When summarizing a document, for example, a good prompt should include the maximum word length, an indication of whether the summary should be in paragraphs or bullet points, and information about the target audience and required style or tone.
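
A prompt that spells out those requirements can be assembled systematically. The Python sketch below only builds the text of such a prompt; the helper names build_summary_prompt and within_word_limit and their parameters are hypothetical, and no particular chatbot or API is assumed. Because a model may not respect the requested length, a simple word-count check on the reply is included too.

```python
def build_summary_prompt(document: str, max_words: int, fmt: str,
                         audience: str, tone: str) -> str:
    """Hypothetical helper: state length, format, audience and tone explicitly."""
    if fmt not in ("paragraphs", "bullet points"):
        raise ValueError("fmt must be 'paragraphs' or 'bullet points'")
    return (
        f"Summarize the document below in no more than {max_words} words.\n"
        f"Present the summary as {fmt}.\n"
        f"The target audience is {audience}.\n"
        f"Write in a {tone} style.\n\n"
        f"Document:\n{document}"
    )


def within_word_limit(summary: str, max_words: int) -> bool:
    """Check the returned summary, since a model may ignore the requested length."""
    return len(summary.split()) <= max_words


prompt = build_summary_prompt(
    document="(paste the text to be summarized here)",
    max_words=150,
    fmt="bullet points",
    audience="secondary-school students with no physics background",
    tone="friendly but precise",
)
print(prompt)
```

If the first reply misses the mark, the same function makes it easy to adjust one requirement at a time and try again.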

Second, information obtained from AI needs to be fact-checked. Chatbots can easily hallucinate, which makes them like an unreliable (but occasionally brilliant) colleague who can get the wrong end of the stick. “Don’t assume that whatever the tool is, that it is correct,” says Phil Robinson, editor of Chemistry World. “Use it like you’d use a peer or colleague who says ‘Have you tried this?’ or ‘Have you thought of that?’”

Finally, science communicators must be transparent in explaining how they used AI. Generative AI is here to stay – and science communicators and journalists are still working out how best to use it to communicate science. But if we are to maintain the quality of science journalism – so vital for the public’s trust in science – we must continuously evaluate and manage how AI is incorporated into the scientific information ecosystem.

Generative AI can help you say what you want to say. But as Dihal concludes: “It’s no substitute for having something to say.”