The original version of this story appeared in Quanta Magazine.

If you’ve been making the same commute for a long time, you’ve probably settled on what seems like the best route. But “best” is a slippery concept. Perhaps one day there’s an accident or road closure, and your fastest route becomes the slowest.

Scenarios like this are also a challenge for researchers who develop algorithms, the step-by-step procedures that computers use to solve problems. Many different algorithms can solve any given problem, and the question of which is best can be frustratingly ambiguous.

For example, imagine an algorithm that’s designed to find the fastest route between two points. There are lots of possible ways to design such an algorithm so that it doesn’t fail. A successful algorithm will always return the fastest route, whether you use it in London or Los Angeles, and whether it’s rush hour or the middle of the night.

But those algorithms aren’t all the same. The time each one takes to find the right answer will vary depending on where and when it’s used, and cases that are hard for one algorithm may be easy for another. Ideally, you’d want an algorithm that always runs faster than the others.

For most problems, it’s simply not possible to find such a unicorn. But a new proof shows that for the quintessential path-finding problem, one algorithm is close to ideal: Assuming worst-case traffic patterns, it’s the best approach on every possible street grid. What’s more, the algorithm is nearly 70 years old and a staple of the undergraduate computer science curriculum. The new work will be presented with a best-paper award at the 2024 Symposium on Foundations of Computer Science next week.

“It’s amazing,” said Tim Roughgarden, a computer scientist at Columbia University. “I can’t imagine a more compelling research paper about a problem we teach students in undergrad algorithms.”

Heaps and Bounds

The story of this iconic path-finding algorithm began with a detour. In 1956, the 26-year-old Dutch computer scientist Edsger Dijkstra wanted to write a program that would show off the capabilities of a brand-new computer called the ARMAC. While shopping with his fiancée in Amsterdam, he stopped in at a café for a break. That’s when he hit on the idea for the algorithm that now bears his name. He didn’t have writing materials on hand, so over the course of 20 minutes he worked out the details in his head.

In an interview toward the end of his life, Dijkstra credited his algorithm’s enduring appeal in part to its unusual origin story. “Without pencil and paper you are almost forced to avoid all avoidable complexities,” he said.

Dijkstra’s algorithm doesn’t just tell you the fastest route to one destination. Instead, it gives you an ordered list of travel times from your current location to every other point that you might want to visit—a solution to what researchers call the single-source shortest-paths problem. The algorithm works in an abstracted road map called a graph: a network of interconnected points (called vertices) in which the links between vertices are labeled with numbers (called weights). These weights might represent the time required to traverse each road in a network, and they can change depending on traffic patterns. The larger a weight, the longer it takes to traverse that path.
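As a rough illustration of such a graph, here is a minimal Python sketch. The vertex names and travel times are invented for this example, and `travel_time` is a hypothetical helper, not anything from the researchers' work.

```python
# Hypothetical road map: each vertex maps to its neighbors, and each
# link carries a weight (here, an invented travel time in minutes).
graph = {
    "home":   {"bakery": 4, "park": 7},
    "bakery": {"home": 4, "park": 2, "office": 9},
    "park":   {"home": 7, "bakery": 2, "office": 5},
    "office": {"bakery": 9, "park": 5},
}


def travel_time(graph, route):
    """Add up the weights along a route given as a list of vertices."""
    return sum(graph[a][b] for a, b in zip(route, route[1:]))


# Two ways to reach the office from home.
direct = travel_time(graph, ["home", "park", "office"])            # 7 + 5
detour = travel_time(graph, ["home", "bakery", "park", "office"])  # 4 + 2 + 5
```

In this made-up map, the route with more stops is the faster one, which is why a path-finding algorithm cannot simply count the number of roads.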

To get a sense of Dijkstra’s algorithm, imagine yourself wandering through a graph, writing down the travel time from your starting point to each new vertex on a piece of scratch paper. Whenever you have a choice about which direction to explore next, head toward the closest vertex you haven’t visited yet. If you discover a faster route to any vertex, jot down the new time and cross out the old one. When you’re sure that you’ve found the fastest path, move the travel time from your notes to a separate, more presentable list.
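The scratch-paper procedure can be sketched in a few lines of Python. This is a simplified illustration under assumed names (`dijkstra_scan`, `tentative`, `final`) and an invented four-vertex map, not the version from Dijkstra's paper. It finds the closest unvisited vertex by scanning every note, which is easy to read but slow.

```python
import math


def dijkstra_scan(graph, source):
    """Dijkstra's procedure with a plain dict as the scratch paper."""
    tentative = {v: math.inf for v in graph}  # scratch-paper travel times
    tentative[source] = 0
    final = []  # the presentable list, in order of increasing travel time

    while tentative:
        # Head toward the closest vertex not yet finalized (a linear scan).
        closest = min(tentative, key=tentative.get)
        time = tentative.pop(closest)
        if time == math.inf:
            break  # everything still on the scratch paper is unreachable
        final.append((closest, time))
        # Cross out old times wherever going through `closest` is faster.
        for neighbor, weight in graph[closest].items():
            if neighbor in tentative and time + weight < tentative[neighbor]:
                tentative[neighbor] = time + weight
    return final


# Invented example map; weights are travel times in minutes.
graph = {
    "home":   {"bakery": 4, "park": 7},
    "bakery": {"home": 4, "park": 2, "office": 9},
    "park":   {"home": 7, "bakery": 2, "office": 5},
    "office": {"bakery": 9, "park": 5},
}
result = dijkstra_scan(graph, "home")
```

Note that the park's first recorded time (7 minutes, straight from home) is later replaced by 6 minutes once the route through the bakery is discovered.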

“It’s a great algorithm,” said Erik Demaine, a computer scientist at the Massachusetts Institute of Technology. “It’s very fast, simple, and easy to implement.”

To put this procedure into practice, you’d need to decide on a system for organizing your notes—a data structure, in the lingo of computer science. That may sound like a minor technical detail, but time spent searching through your notes whenever you need to edit or remove an entry can have a big effect on the overall runtime of the algorithm.

Dijkstra’s paper used a simple data structure that left room for improvement. In the following decades, researchers developed better ones, affectionately dubbed “heaps,” in which certain items are easier to find than others. They take advantage of the fact that Dijkstra’s algorithm only ever needs to remove the entry for the closest remaining vertex. “A heap is basically a data structure that allows you to do this very quickly,” said Václav Rozhoň, a researcher at the Institute for Computer Science, Artificial Intelligence and Technology (INSAIT) in Sofia, Bulgaria.
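To see what a heap buys you, here is a hedged sketch that uses Python's standard `heapq` module. Two assumptions are worth stating: `heapq` is an ordinary binary heap, not the more refined designs discussed in this story, and instead of editing an entry in place the sketch pushes a fresh entry and skips stale ones later. The function name and the example map are invented for illustration.

```python
import heapq
import math


def dijkstra_heap(graph, source):
    """Dijkstra's procedure with a binary heap holding the notes."""
    best = {v: math.inf for v in graph}
    best[source] = 0
    heap = [(0, source)]  # entries are (travel time, vertex)
    done = set()
    order = []

    while heap:
        # The heap hands over the closest remaining entry quickly.
        time, vertex = heapq.heappop(heap)
        if vertex in done:
            continue  # stale note: a faster time was already finalized
        done.add(vertex)
        order.append((vertex, time))
        for neighbor, weight in graph[vertex].items():
            candidate = time + weight
            if candidate < best[neighbor]:
                best[neighbor] = candidate
                heapq.heappush(heap, (candidate, neighbor))
    return order


# Invented example map; weights are travel times in minutes.
graph = {
    "home":   {"bakery": 4, "park": 7},
    "bakery": {"home": 4, "park": 2, "office": 9},
    "park":   {"home": 7, "bakery": 2, "office": 5},
    "office": {"bakery": 9, "park": 5},
}
result = dijkstra_heap(graph, "home")
```

The output matches what a note-by-note scan would produce. Only the bookkeeping changes, and that bookkeeping is where the running time is won or lost.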

In 1984, two computer scientists developed a clever heap design that enabled Dijkstra’s algorithm to reach a theoretical limit, or “lower bound,” on the time required to solve the single-source shortest-paths problem. In one specific sense, this version of Dijkstra’s algorithm is the best possible. That was the last word on the standard version of the problem for nearly 40 years. Things only changed when a few researchers took a closer look at what it means to be “best.”
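For readers who want the standard textbook formulas behind that claim, here is a brief summary. The symbols are assumptions introduced here, not taken from the story: $n$ is the number of vertices and $m$ the number of links, and the 1984 design is the one usually taught as the Fibonacci heap.

```latex
\begin{align*}
  T_{\text{binary heap}}(n, m) &= O\big((n + m)\log n\big) \\
  T_{\text{1984 heap}}(n, m)   &= O\big(m + n\log n\big) \\
  T_{\text{any comparison-based algorithm}}(n, m) &= \Omega\big(m + n\log n\big)
    \quad \text{in the worst case}
\end{align*}
```

The last line reflects two costs: every link may have to be examined at least once, and producing the travel times in sorted order is at least as hard as sorting $n$ numbers by comparisons.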

Best Behavior

Researchers typically compare algorithms by studying how they fare in worst-case scenarios. Imagine the world’s most confusing street grid, then add some especially perplexing traffic patterns. If you insist on finding the fastest routes in these extreme circumstances, the 1984 version of Dijkstra’s algorithm is provably unbeatable.

But hopefully, your city doesn’t have the world’s worst street grid. And so you may ask: Is there an algorithm that’s unbeatable on every road network? The first step to answering this question is to make the conservative assumption that each network has worst-case traffic patterns. Then you want your algorithm to find the fastest paths through any possible graph layout, assuming the worst possible weights. Researchers call this condition “universal optimality.” If you had a universally optimal algorithm for the simpler problem of just getting from one point on a graph to another, it could help you beat rush hour traffic in every city in the world.

“This sounds too good to be true,” said Bernhard Haeupler, a computer scientist affiliated with INSAIT and the Swiss Federal Institute of Technology Zurich (ETH Zurich).

Haeupler became fascinated with the promise of universal optimality while writing a grant proposal in the mid-2010s. Many researchers find that part of the job tedious, but Haeupler saw it as an opportunity. “It allows you to throw your skepticism out and just dream big,” he said.

Those dreams came to fruition in 2021, when Haeupler and two graduate students proved that it was possible to build universally optimal algorithms for several important graph problems. He didn’t think to ask whether the same condition was achievable for the classic single-source shortest-paths problem. That would have to wait until a different graduate student dared to dream big.

The Shortest Path to Victory

In early 2023, Rozhoň was at the tail end of his graduate program at ETH Zurich. He had just finished a paper about going beyond worst-case analysis in a different context, and he was brainstorming new ideas to pursue with his coauthor Jakub Tětek, then a graduate student at the University of Copenhagen. Rozhoň suggested they try to devise a universally optimal algorithm for the single-source shortest-paths problem.

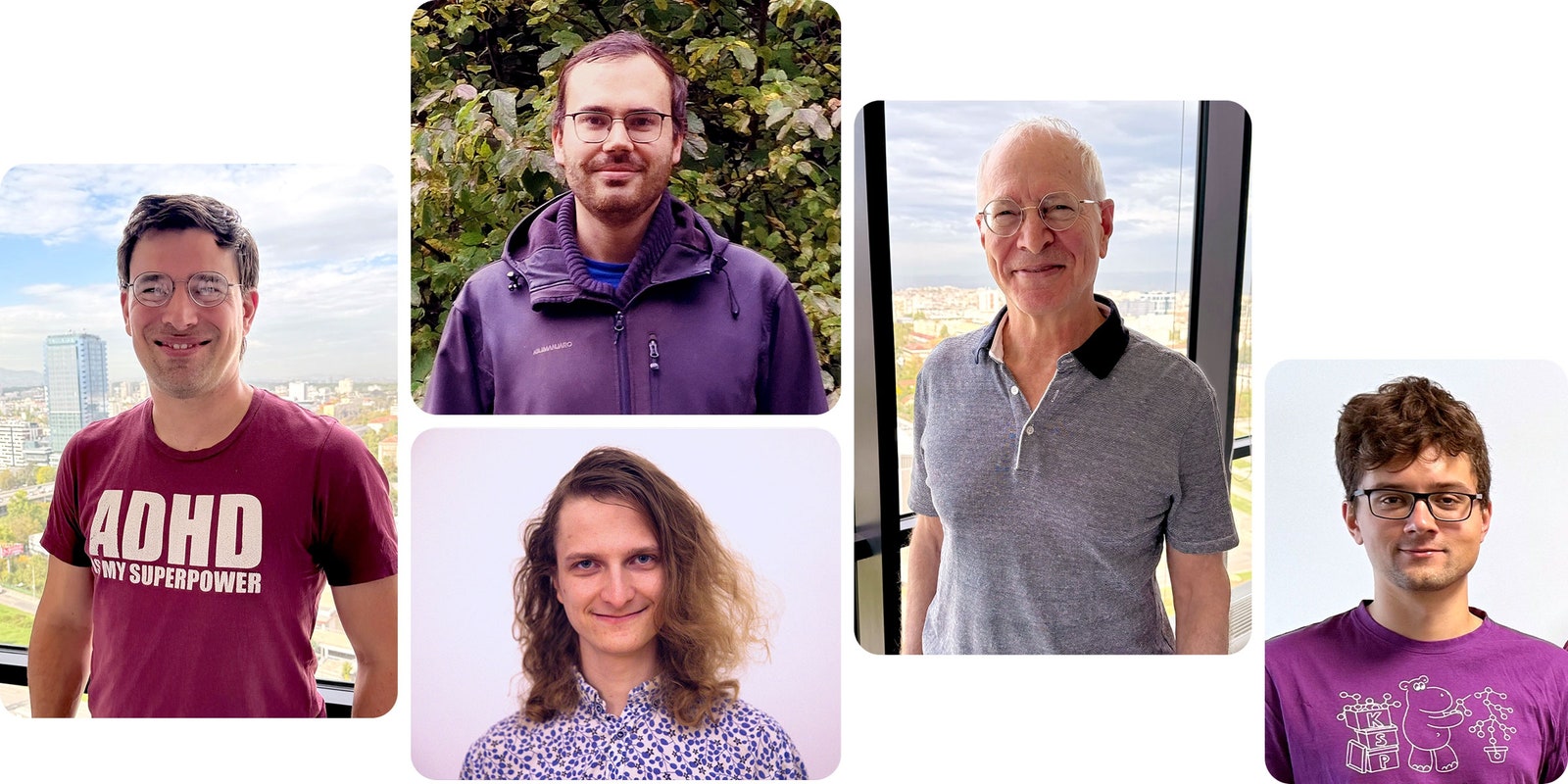

“I said, ‘No, but that’s not possible; that just cannot be done,’” Tětek recalled. But Rozhoň convinced him to give it a try. In the spring, the team grew to three with the addition of Richard Hladík, a graduate student at ETH Zurich whom Rozhoň and Tětek had met when all three were high schoolers in the Czech Republic.

The trio tinkered with many different aspects of Dijkstra’s algorithm and the heap that it used, and they managed to kludge together a universally optimal variant. But the resulting algorithm was complicated, and they couldn’t pinpoint what conditions were actually necessary for universal optimality. In a field that thrives on comprehensive and rigorous proofs, this wasn’t enough.

The three students then turned from mathematical networks to social ones. Rozhoň had begun discussing the problem with Haeupler while both were visiting colleagues in New York. From there, Haeupler flew to Panama for a holiday, but he wasn’t quite ready to set the problem aside.

“It really was vacation,” he said. “But then also, thinking doesn’t stop.”

During a Zoom call a few days into Haeupler’s trip, the team of four settled on a new approach. They decided to focus mainly on the choice of data structure. Soon, they began to suspect that this alone would be enough: they could leave the rest of Dijkstra’s algorithm intact. Within a month, they’d proved it.

The key ingredient turned out to be a special property of some data structures that lets them quickly access recently added items. Heaps with this property were first constructed more than 20 years ago, but in all the years that followed, nobody made full use of it. The four researchers proved that they only needed to construct a data structure with this new property and all the other features of the 1984 heap. All they needed to do now was design it.
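One rough way to make "recently added" concrete is to count, for each item removed from a heap, how many items were inserted after it. The sketch below does only that bookkeeping. It is not the researchers' data structure, the function name and the operation log are invented, and the idea that removal cost should scale with the logarithm of this count rather than of the heap's total size is stated here as a simplified reading of the property.

```python
def inserted_after_counts(operations):
    """For each removed item, count the items inserted after it.

    `operations` is a list of ("insert", name) or ("remove", name) pairs.
    """
    insert_time = {}    # name -> how many inserts had happened, itself included
    inserts_so_far = 0
    counts = {}
    for kind, name in operations:
        if kind == "insert":
            inserts_so_far += 1
            insert_time[name] = inserts_so_far
        else:
            # Inserts that happened strictly after this item arrived.
            counts[name] = inserts_so_far - insert_time.pop(name)
    return counts


# Invented operation log for illustration.
ops = [
    ("insert", "a"), ("insert", "b"), ("remove", "b"),
    ("insert", "c"), ("insert", "d"), ("remove", "d"),
    ("remove", "a"),
]
counts = inserted_after_counts(ops)
```

Here "b" and "d" are removed right after they arrive, so nothing was inserted after them, while "a" sat through three later insertions. A heap with the property described above would handle the first two removals especially cheaply.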

The last person to join the team was Robert Tarjan, a computer scientist at Princeton University who was one of the inventors of that special 1984 heap. Tarjan had won the Turing Award, considered the highest honor in the field, and had also been a mentor to Haeupler in the late 2000s. When Tarjan visited Zurich in May, Haeupler invited him over for fondue—his specialty—and mentioned the new shortest-paths project. Tarjan was immediately in.

The five researchers set to work developing a heap data structure with all the properties they needed. They started with an unwieldy design and improved it bit by bit until they were finally satisfied. “Every time we looked at it, we were able to simplify a little bit,” Rozhoň said. “I was actually surprised how simple it was in the end.”

Some variants of Dijkstra’s algorithm have seen real-world use in software like Google Maps. The new result probably won’t have such practical applications, for which there are many considerations beyond theoretical optimality guarantees. But it may change how researchers study optimality, prompting them to look beyond the usual worst-case analysis. Often, algorithms only achieve stronger guarantees at the cost of added complexity. The new result suggests that simple algorithms with these stronger guarantees might be more widespread than researchers previously thought—the team has already identified two other examples.

“The general concept is very compelling,” Tarjan said. “It remains to be seen how far one can take this.”
