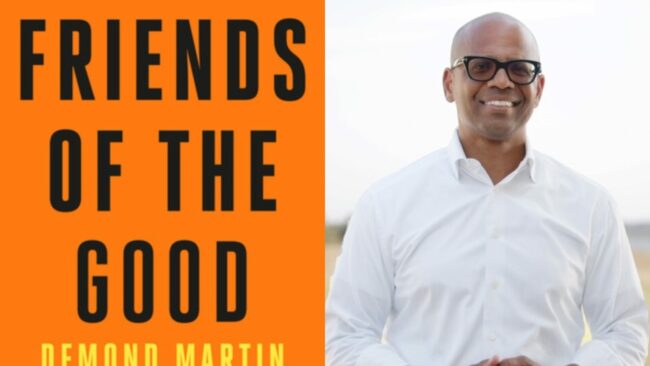

ESSENCE AUTHORS: Demond Martin’s New Book ‘Friends Of The Good’ Reveals The Power Of Chosen Family

… this philosophy with his new book, Friends of the Good. Published by …. “It’s not a self-help book; it’s an honest story of … to intentional storytelling by publishing books that strongly resonate with Black …